How To Build a Custom Chatbot Using LangChain With Examples

Imagine a world where technology doesn’t just inform you, it engages with you. Where a digital companion walks alongside you, offering insightful advice, answering your questions, and even anticipating your needs. This isn’t a scene from a futuristic sci-fi flick; it’s the dawn of a new era in artificial intelligence, one where custom chatbots built with frameworks like LangChain are blurring the lines between machine and meaningful connection.

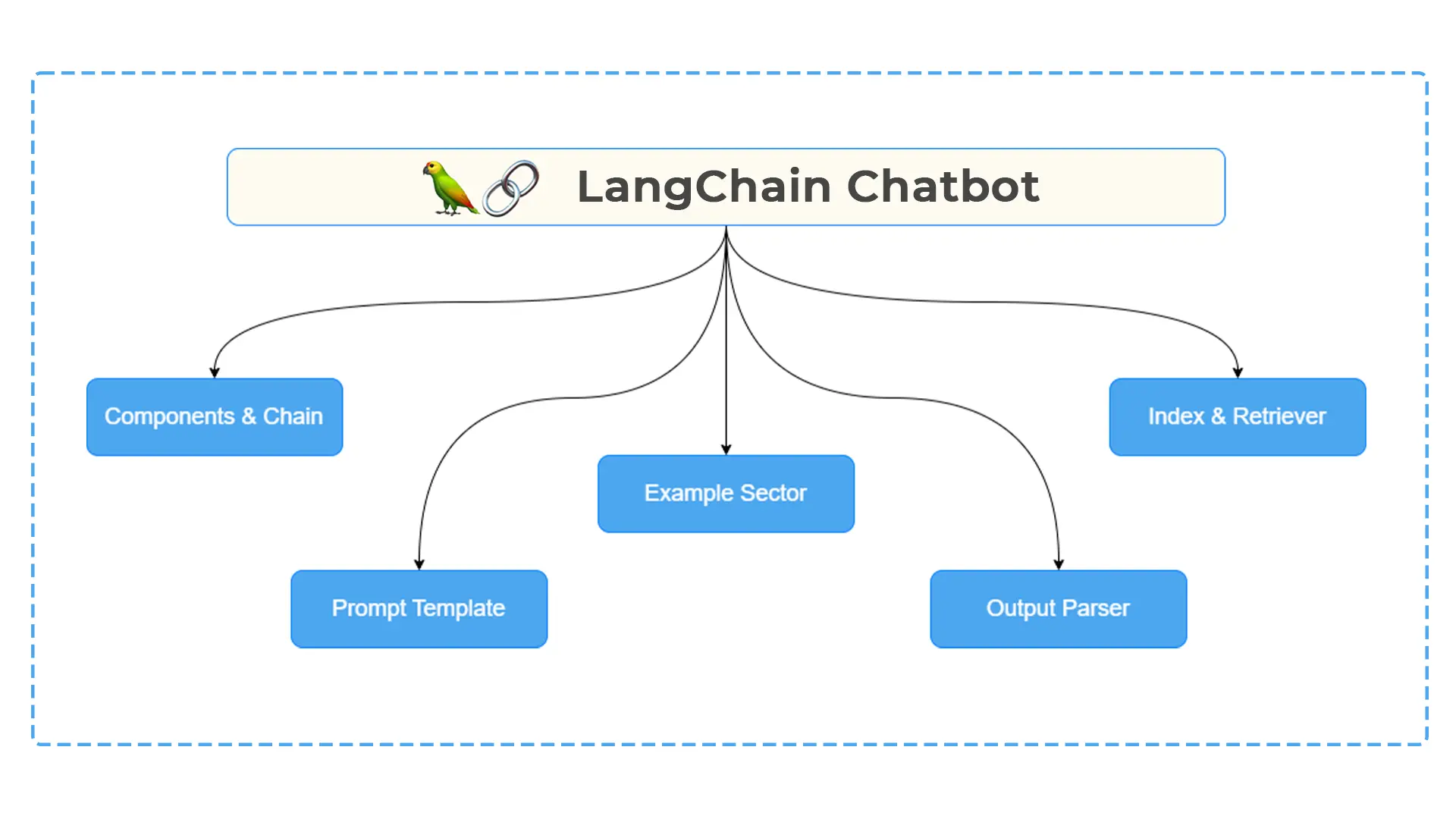

But what is LangChain, you ask? Think of it as your virtual workshop, a set of tools and building blocks specifically designed for crafting captivating chatbots. Unlike pre-programmed scripts, LangChain empowers you to define the conversation flow, inject human-like nuance into your chatbot’s responses, and connect it to the real world through external data and APIs. It’s the bridge between your creative vision and the powerful capabilities of Large Language Models (LLMs), allowing you to shape a digital Michelangelo of conversation.

Gone are the days of robotic, pre-programmed dialogs. With LangChain, you can create multi-step interactions, integrate external knowledge sources, and even imbue your chatbot with memory, fostering a sense of familiarity and genuine connection with your users. Whether you’re a seasoned developer or a curious entrepreneur, this blog will be your guide, unveiling the secrets of LangChain and empowering you to build your very own AI-powered conversational companion.

We have written an in-depth blog on LangChain that you can read here: What is LangChain?

In the upcoming sections, we’ll delve deep into the practicalities of building your LangChain chatbot, equipping you with the knowledge and tools to bring your vision to life. We have also created our chatbot using LangChain, which you can view and test from here. Let’s begin building!

Note: All the examples in this blog use LangChainJS, connected to the OpenAI API and Pinecone.

To install Next.js, do the following:

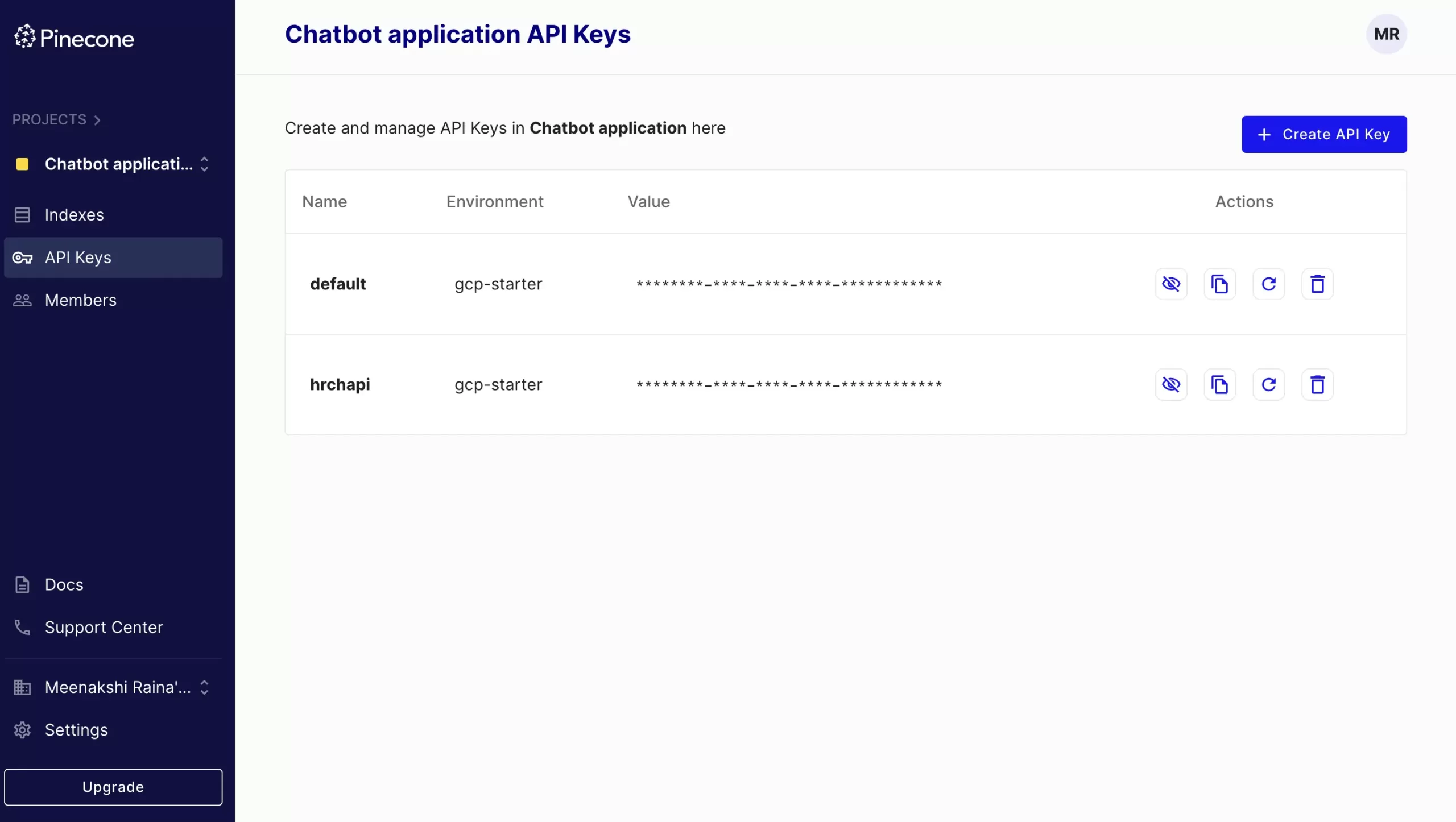

To set up a Pinecone index, sign in with your details at https://www.pinecone.io.

Click on “Get Started”.

Fill in your Name, Goal, Purpose of index, and preferred coding language.

- Now create an index.

- Configure your index with 1536 dimensions, which matches the 1536-dimensional embeddings produced by OpenAI embedding models such as text-embedding-ada-002.

- Select your storage type or pod type (the default is starter).

Next, create an API key for the Pinecone index by entering a name for the key. Once created, the key is shown as your project’s API key.

Connect the index to your application using the code that the Pinecone app generates for your configuration.

To get started, install LangChain with the following command: `npm install langchain`

LangChain can be used in Vercel/Next.js projects. Next.js supports using LangChain in frontend components, serverless functions, and Edge functions. It can be imported using the following syntax:

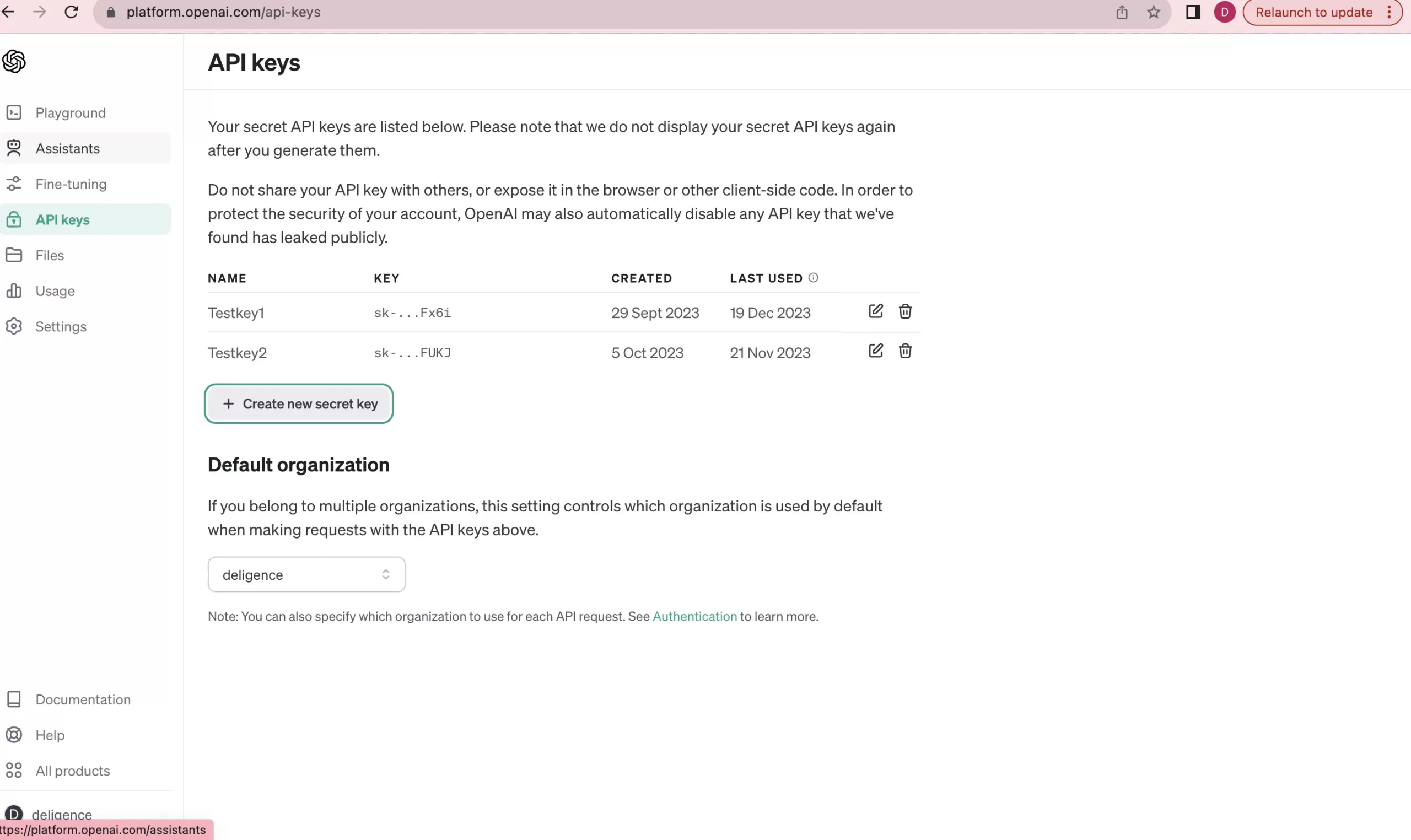

- The OpenAI API uses API keys for authentication. To get a key, create an account on the OpenAI platform, go to API keys, and generate a key with the “Create new secret key” option.

- Set up the OpenAI API key in the .env (environment) file of your Next.js project as `OPENAI_API_KEY=your-secret-key`.

Then make the corresponding change in next.config.js by inserting this line of code as an object:

Wherever the API key is needed, access it as `process.env.OPENAI_API_KEY`.
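A small helper lets the app fail fast when the key is missing and reads it in one place. This is a minimal sketch. `requireEnv` is a hypothetical helper, not part of Next.js or LangChain. It takes the environment object as a parameter, so your app can pass in `process.env`.

```typescript
// Hypothetical helper (not a Next.js or LangChain API): look up a required
// environment variable and throw a clear error if it is missing or empty.
type Env = Record<string, string | undefined>;

function requireEnv(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// In server-side Next.js code you would call, for example:
//   const apiKey = requireEnv(process.env, "OPENAI_API_KEY");
```

Call it only from server-side code, such as API routes or server components, so the secret key does not end up in browser bundles.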

Prompt Templates in LangChain are powerful tools that help you build customized prompts for your large language models (LLMs) with ease and flexibility. Instead of crafting individual prompts for every specific case, you define a template once with placeholders. Then, you simply “fill in the blanks” with different values to create unique prompts on the fly. Placeholders can be any kind of data, like strings, numbers, dictionaries, or even data classes. This lets you personalize prompts based on user queries, context, or other information. Think of Prompt Templates in LangChain as Lego blocks for building instructions for your language model. You create reusable templates with empty slots, then plug in different words, sentences, or even whole blocks of data to fit the task at hand.

Imagine you’re writing a story but want your model to fill in missing details. Here’s an example Prompt Template:

Then, when you need a new story, you just fill in the blanks:

Now, your model has all the base information to write a unique fairy tale about Pip the talking mouse and the lost princess!
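The same "fill in the blanks" idea can be sketched without any dependencies. In this sketch, `formatTemplate` is a hypothetical helper. The placeholders `character`, `quest`, and `setting`, and the value given for `setting`, are illustrative choices. In LangChainJS you would typically create the template with `PromptTemplate.fromTemplate(...)` from `@langchain/core/prompts` and fill it with its `format` method.

```typescript
// Dependency-free sketch of a prompt template: placeholders in {braces}
// are replaced by values supplied at format time. Placeholder names are
// illustrative, not the ones from the original example.
type TemplateValues = Record<string, string | number>;

function formatTemplate(template: string, values: TemplateValues): string {
  return template.replace(/\{(\w+)\}/g, (_match: string, key: string) => {
    if (!(key in values)) {
      // Fail loudly instead of sending a prompt with an unfilled slot.
      throw new Error(`Missing value for placeholder: ${key}`);
    }
    return String(values[key]);
  });
}

const storyTemplate =
  "Write a short fairy tale about {character}, who must {quest} in {setting}.";

// Fill in the blanks for one particular story.
const storyPrompt = formatTemplate(storyTemplate, {
  character: "Pip the talking mouse",
  quest: "find the lost princess",
  setting: "an enchanted forest",
});
// storyPrompt === "Write a short fairy tale about Pip the talking mouse, who must find the lost princess in an enchanted forest."
```

Because a missing value throws, a forgotten variable shows up as an error. Otherwise it would slip through as a prompt that still contains a literal placeholder.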

In a LangChain chatbot, Prompt Templates are your secret weapon for crafting dynamic and engaging responses.

Imagine a simple FAQ chatbot. Here’s a template to handle user questions:

Usage:

When a user asks a question, you analyze it to extract the topic (question_topic). Then, you populate the template with the extracted topic and the corresponding answer details retrieved based on that topic. This generates a personalized and informative response for the user.
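A rough sketch of that flow follows, and all of it is hypothetical. `extractTopic` uses naive keyword matching, and `faqAnswers` is a tiny in-memory table with made-up sample answers. Together they stand in for the topic extraction and knowledge lookup a real chatbot would use, for example an LLM call or a Pinecone query. The `question_topic` placeholder comes from the text above; `answer_details` is an assumed name.

```typescript
// Hypothetical FAQ flow: extract a topic, look up answer details,
// and fill a prompt template with both.
const faqTemplate =
  "You are a helpful support assistant. The user asked about {question_topic}.\n" +
  "Answer using only these details: {answer_details}";

// Made-up sample knowledge source; a real bot might query a database or vector store.
const faqAnswers: Record<string, string> = {
  shipping: "Orders ship within 2 business days.",
  returns: "Items can be returned within 30 days of delivery.",
};

// Naive keyword-based topic extraction (an assumption for illustration).
function extractTopic(question: string): string | undefined {
  const lower = question.toLowerCase();
  return Object.keys(faqAnswers).find((topic) => lower.includes(topic));
}

function buildFaqPrompt(question: string): string {
  const topic = extractTopic(question);
  if (topic === undefined) {
    // No known topic: ask the model to steer the user toward supported topics.
    return "Apologize and say you can only answer questions about: " +
      Object.keys(faqAnswers).join(", ") + ".";
  }
  return faqTemplate
    .replace("{question_topic}", topic)
    .replace("{answer_details}", faqAnswers[topic]);
}
```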

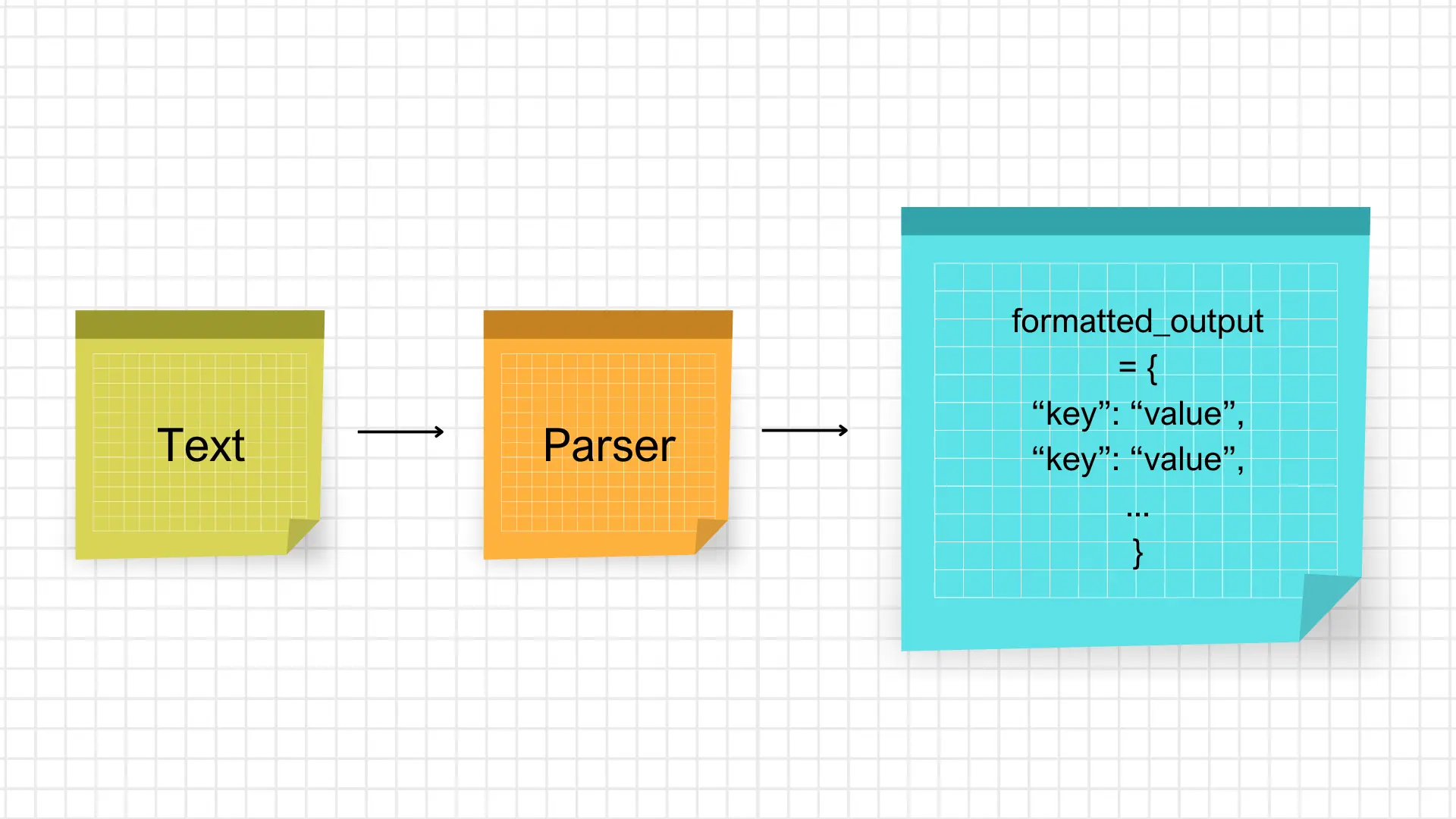

Output parsers are your trusty companions for taming the raw text generated by large language models (LLMs). Imagine you ask your LLM to write a poem, but instead of a beautiful verse, you get a jumbled mess of words. That’s where output parsers come in. They take the LLM’s output and transform it into a more structured and useful format. This could be JSON data, Python data classes, database rows, or custom formats.

StringOutputParser: Converts the raw output of an LLM or chat model (such as a chat message) into a plain string.

StructuredOutputParser: Converts text into JSON objects based on predefined schemas.

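PydanticOutputParser: Parses the LLM’s output into Pydantic model instances. This parser exists only in the Python version of LangChain; in LangChainJS, StructuredOutputParser can be built from a Zod schema instead.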

EnumOutputParser: Parses text into predefined categories or values.

DateTimeOutputParser: Extracts date and time information from the generated text.

Basically, the Prompt template sets the course for the LLM’s journey, while the output parser refines the destination and makes it readily available for your use.
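In LangChainJS, a StructuredOutputParser can be created from a schema, for example with `StructuredOutputParser.fromZodSchema`. It tells the model which JSON format to use and then parses the reply. The dependency-free sketch below imitates only the parsing half. `parseStructured` and its `requiredKeys` check are hypothetical simplifications, not the library's implementation.

```typescript
// Hypothetical, dependency-free imitation of a structured output parser:
// pull a JSON object out of raw LLM text and check that required keys exist.
type Parsed = Record<string, unknown>;

function parseStructured(raw: string, requiredKeys: string[]): Parsed {
  // Models often wrap JSON in a markdown code fence; use its contents if present.
  const fenced = raw.match(/`{3}(?:json)?\s*([\s\S]*?)`{3}/);
  const jsonText = (fenced ? fenced[1] : raw).trim();

  let data: unknown;
  try {
    data = JSON.parse(jsonText);
  } catch (err) {
    throw new Error(`Output is not valid JSON (${String(err)}): ${jsonText}`);
  }
  if (typeof data !== "object" || data === null || Array.isArray(data)) {
    throw new Error("Expected a JSON object");
  }
  const obj = data as Parsed;
  // Validate against the expected "schema" (here just a list of required keys).
  for (const key of requiredKeys) {
    if (!(key in obj)) {
      throw new Error(`Missing required key: ${key}`);
    }
  }
  return obj;
}
```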

Here is an example of StringOutputParser transforming the raw LLM/ChatModel output into a consistent string format:
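Here is a rough, dependency-free approximation of what StringOutputParser does. A chat model returns a message object, and the parser hands back just its text content, so the next step receives a plain string. `ChatMessage`, `fakeChatModel`, and `parseToString` are hypothetical stand-ins. With LangChainJS, the real pieces are usually connected as `prompt.pipe(model).pipe(new StringOutputParser())`.

```typescript
// Hypothetical stand-ins: a chat "message" and a fake model that returns one.
interface ChatMessage {
  role: "assistant";
  content: string;
}

function fakeChatModel(prompt: string): ChatMessage {
  // A real chat model would call the OpenAI API here.
  return { role: "assistant", content: `Echo: ${prompt}` };
}

// Approximation of a string output parser: accept either a plain string or a
// message object and return only its text content.
function parseToString(output: string | ChatMessage): string {
  return typeof output === "string" ? output : output.content;
}

const answer = parseToString(fakeChatModel("Hello"));
// answer === "Echo: Hello"
```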

In LangChain, a stop sequence is a specific string that, when the model generates it, tells the model to stop producing further output. LangChain passes stop sequences through to the model provider (the OpenAI API accepts them as its stop parameter), and the stop sequence itself is not included in the returned text. Stop sequences serve as a control mechanism to prevent unnecessary text generation, keep responses focused, and save tokens. A common pattern is to write the prompt so that the model emits a known marker, such as a newline or a label like “Observation:”, exactly where you want the answer to end, so that only the relevant part reaches your output parser. Used well, stop sequences give you more control over the model’s text generation, leading to concise, relevant, and efficient responses in your LangChain applications.
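The sketch below imitates the effect of stop sequences on the returned text. Output is cut at the earliest occurrence of any stop string, and the stop string itself is dropped. `applyStopSequences` is a hypothetical helper for illustration only. The real cut happens on the provider side during generation. With LangChainJS, you pass the sequences to the model instead, for example through the `stop` call option.

```typescript
// Hypothetical helper imitating stop sequences: keep the text before the
// earliest stop string, dropping the stop string and everything after it.
function applyStopSequences(generated: string, stop: string[]): string {
  let cut = generated.length;
  for (const seq of stop) {
    if (seq === "") continue; // an empty stop string would match everywhere
    const index = generated.indexOf(seq);
    if (index !== -1 && index < cut) {
      cut = index;
    }
  }
  return generated.slice(0, cut);
}

// Example: the prompt asks for a one-line answer, and "\nObservation:" marks the end.
const rawOutput = "Answer: Paris is the capital of France.\nObservation: the user seems satisfied";
const trimmed = applyStopSequences(rawOutput, ["\nObservation:"]);
// trimmed === "Answer: Paris is the capital of France."
```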

By strategically incorporating stop sequences, you can refine your chatbot’s interactions, ensure concise and relevant responses, manage task completion, prevent unwanted content, and enhance its overall effectiveness in LangChain applications.

Function Schema in LangChain defines the structure and expected input/output format of functions that your large language model (LLM) can call, such as the functions you describe to OpenAI’s function-calling API. It acts as a roadmap for developers interacting with the LLM, specifying what it can do and how to use its capabilities effectively.

Function names and descriptions

Clearly identify the functionality each function offers and its intended purpose.

Input parameters

Define the expected data types and required/optional parameters for feeding information to the model. This could include text prompts, dialogue history, or specific data structures.

Output format

Specify the format of the LLM’s response, whether it’s text generation, structured data, or something else. Data types like JSON or custom formats can be defined here.

Additional details

Depending on the model and language used, further information can be included, such as:

- Error codes and handling mechanisms.

- Authentication requirements for accessing specific functions.

- Versioning information for different model variants.
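As an illustration of those components, here is a function schema in the shape used by OpenAI function calling. It has a name, a description, and the input parameters written as JSON Schema. The `get_order_status` function, its parameters, and the `validateArgs` check are hypothetical; a real application might use a full JSON Schema validator or Zod instead. When the model decides to call the function, it replies with the function name and a JSON string of arguments. Your code should validate those arguments before running anything.

```typescript
// Hypothetical function schema in the OpenAI function-calling format.
interface JsonSchemaProperty {
  type: "string" | "number" | "boolean";
  description: string;
  enum?: string[];
}

interface FunctionSchema {
  name: string;
  description: string;
  parameters: {
    type: "object";
    properties: Record<string, JsonSchemaProperty>;
    required: string[];
  };
}

const getOrderStatus: FunctionSchema = {
  name: "get_order_status",
  description: "Look up the shipping status of a customer's order.",
  parameters: {
    type: "object",
    properties: {
      orderId: { type: "string", description: "The order identifier" },
      channel: { type: "string", description: "Where to send updates", enum: ["email", "sms"] },
    },
    required: ["orderId"],
  },
};

// Minimal check of model-supplied arguments against the schema above.
function validateArgs(schema: FunctionSchema, argsJson: string): Record<string, unknown> {
  const parsed: unknown = JSON.parse(argsJson);
  if (typeof parsed !== "object" || parsed === null || Array.isArray(parsed)) {
    throw new Error("Arguments must be a JSON object");
  }
  const args = parsed as Record<string, unknown>;
  for (const key of schema.parameters.required) {
    if (!(key in args)) throw new Error(`Missing required argument: ${key}`);
  }
  for (const key of Object.keys(args)) {
    const prop = schema.parameters.properties[key];
    if (!prop) throw new Error(`Unknown argument: ${key}`);
    const value = args[key];
    if (typeof value !== prop.type) throw new Error(`Argument ${key} should be a ${prop.type}`);
    if (prop.enum && prop.enum.indexOf(String(value)) === -1) {
      throw new Error(`Argument ${key} must be one of: ${prop.enum.join(", ")}`);
    }
  }
  return args;
}
```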

Clarity and understanding

Developers have a clear understanding of the LLM’s capabilities and how to interact with it effectively.

Reduced errors

Specifying input/output formats minimizes confusion and prevents invalid requests or unexpected responses.

Improved documentation

Schema documentation serves as a valuable reference for developers and users unfamiliar with the LLM’s functionality.

Standardized integration

Enables easier integration of the LLM with external systems and applications through consistent data formats.

Side Note:

Visit the OpenAI API documentation (https://beta.openai.com/docs/api-reference) to explore the available functions and their schemas.

In LangChain, a runnable sequence refers to a specific type of component used to orchestrate your conversational AI flow. It essentially tells LangChain “what to do next” during a user interaction. Runnable sequence can also be defined as a series of components chained together to perform a specific task or process. These components can be:

LLMs (Large Language Models)

Generating text, translating languages, writing different kinds of creative content.

Vector stores

Storing and retrieving text or code embeddings for efficient comparison and retrieval.

Output parsers

Extracting structured data from LLM outputs like facts, emotions, or specific details based on defined schema.

Components

Custom functions or pre-built components specific to your needs.

Each component in the sequence takes the output of the previous one as its input, forming a chain of operations. This allows you to orchestrate complex workflows involving multiple LLM calls, data processing, and custom logic.

In this sequence, the first Runnable is an object with two properties: ‘context’ and ‘question’. The ‘context’ property is a function that retrieves and formats documents and the ‘question’ property is a new instance of RunnablePassthrough, which simply passes its input to the next Runnable.

The output of each Runnable becomes the input of the next Runnable in the sequence. Because the first step is an object, its output is an object holding both the retrieved context and the original question, so the next step (typically a prompt template) receives exactly the variables it needs. This design pattern helps to avoid code duplication and makes the code more modular and easier to maintain.
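Here is a dependency-free sketch of a sequence with that shape. The first step builds `{ context, question }`: it retrieves and formats documents for `context` and passes the input through unchanged for `question`. A prompt step, a model step, and a parsing step follow, each receiving the previous step's output. `runSequence`, `retrieveDocs`, and `fakeModel` are hypothetical stand-ins for `RunnableSequence.from([...])`, a vector-store retriever, and a chat model. They run synchronously here for simplicity, whereas real runnables are asynchronous.

```typescript
// Hypothetical, synchronous stand-in for a runnable sequence: each step
// receives the previous step's output.
type Step = (input: any) => any;

function runSequence(steps: Step[], input: unknown): unknown {
  return steps.reduce((value: unknown, step: Step) => step(value), input);
}

// Stand-in document store and retriever (keyword overlap instead of embeddings).
const docs = [
  "LangChain composes LLM calls into chains.",
  "Pinecone stores vector embeddings.",
  "Next.js is a React framework.",
];

function retrieveDocs(question: string): string[] {
  const words = question.toLowerCase().split(/\W+/).filter((w) => w.length > 3);
  return docs.filter((doc) => words.some((w) => doc.toLowerCase().includes(w)));
}

// Stand-in chat model: reports how many context lines it received.
function fakeModel(prompt: string): { content: string } {
  const contextLines = prompt.split("\n").filter((l) => l.startsWith("- ")).length;
  return { content: `Answer based on ${contextLines} document(s).` };
}

const chain: Step[] = [
  // Step 1: a map producing { context, question } from the raw question string.
  (question: string) => ({
    context: retrieveDocs(question).map((d) => `- ${d}`).join("\n"),
    question, // passthrough: the original input, unchanged
  }),
  // Step 2: prompt template filled with both fields.
  ({ context, question }: { context: string; question: string }) =>
    `Answer using this context:\n${context}\nQuestion: ${question}`,
  // Step 3: model call.
  fakeModel,
  // Step 4: output parser returning plain text.
  (message: { content: string }) => message.content,
];

const result = runSequence(chain, "What does Pinecone store?");
// result === "Answer based on 1 document(s)."
```

In LangChainJS, a plain object used as a step in `RunnableSequence.from([...])` is converted into a RunnableMap automatically. There, `new RunnablePassthrough()` plays the role of the `question` line.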

RunnableMap refers to a powerful tool for executing multiple components in parallel and collecting their outputs as a single map. Imagine you have a team of assistants, each specializing in different tasks. RunnableMap empowers you to send them instructions simultaneously and gather their completed work in a neat, organized manner.

In short, RunnableMap lets you execute multiple Runnables in parallel and returns their outputs as a map.

Function

RunnableMap is a built-in component within LangChain.

Behavior

It is created from an object in which each key points to a separate runnable component or function. When invoked, RunnableMap passes the same input to every component, runs them concurrently, and assembles their outputs into a single map, pairing each original key with the output of the component it pointed to.
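This behavior can be sketched with `Promise.all`. Every branch receives the same input, all branches start before any is awaited, and the results come back as one object with the same keys. `runMap`, `delay`, and the three branches are hypothetical. In LangChainJS, the equivalent is `RunnableMap.from({ ... })` from `@langchain/core/runnables`.

```typescript
// Hypothetical sketch of a runnable map: run every branch on the same input
// concurrently and collect the results under the same keys.
type Branch<I, O> = (input: I) => Promise<O>;

async function runMap<I>(
  branches: Record<string, Branch<I, unknown>>,
  input: I,
): Promise<Record<string, unknown>> {
  const keys = Object.keys(branches);
  // Start all branches before awaiting any of them.
  const outputs = await Promise.all(keys.map((key) => branches[key](input)));
  const result: Record<string, unknown> = {};
  keys.forEach((key, i) => {
    result[key] = outputs[i];
  });
  return result;
}

// Simulates an asynchronous call (such as an LLM request) that takes `ms` milliseconds.
function delay<T>(ms: number, value: T): Promise<T> {
  return new Promise<T>((resolve) => {
    setTimeout(() => resolve(value), ms);
  });
}

const branches: Record<string, Branch<string, unknown>> = {
  summary: (text) => delay(30, `Summary of: ${text}`),
  wordCount: (text) => delay(20, text.split(/\s+/).length),
  shouted: (text) => delay(10, text.toUpperCase()),
};
```

Because the branches run concurrently, the total wait in this sketch is roughly that of the slowest branch (about 30 ms), rather than the sum of all three (about 60 ms).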

Parallel execution

You can significantly speed up your workflows by running multiple tasks simultaneously instead of sequentially.

Independent processing

Each component operates independently, allowing for efficient handling of diverse tasks.

Organized results

The output map neatly structures the results, making it easy to access and utilize the generated data.

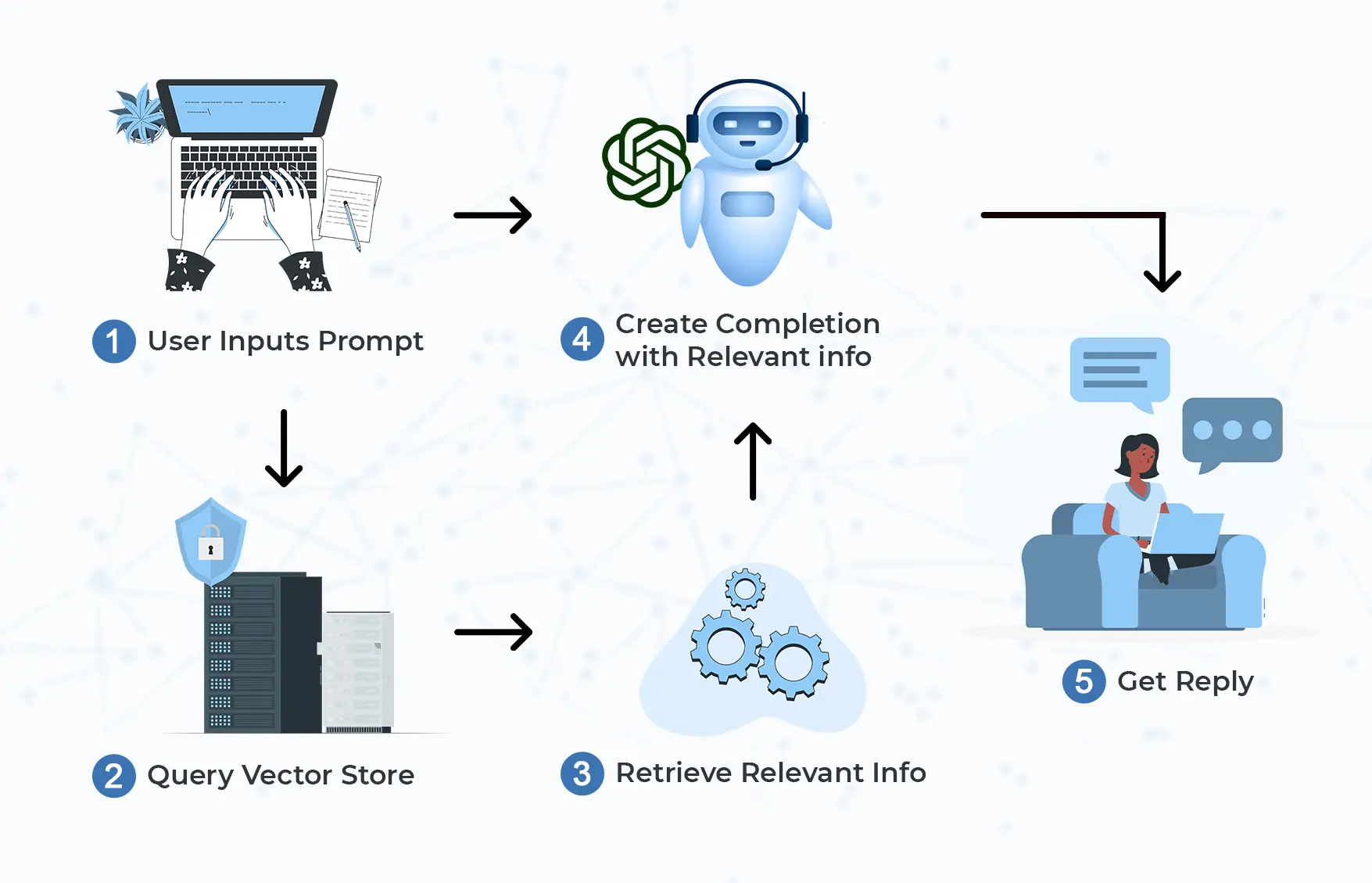

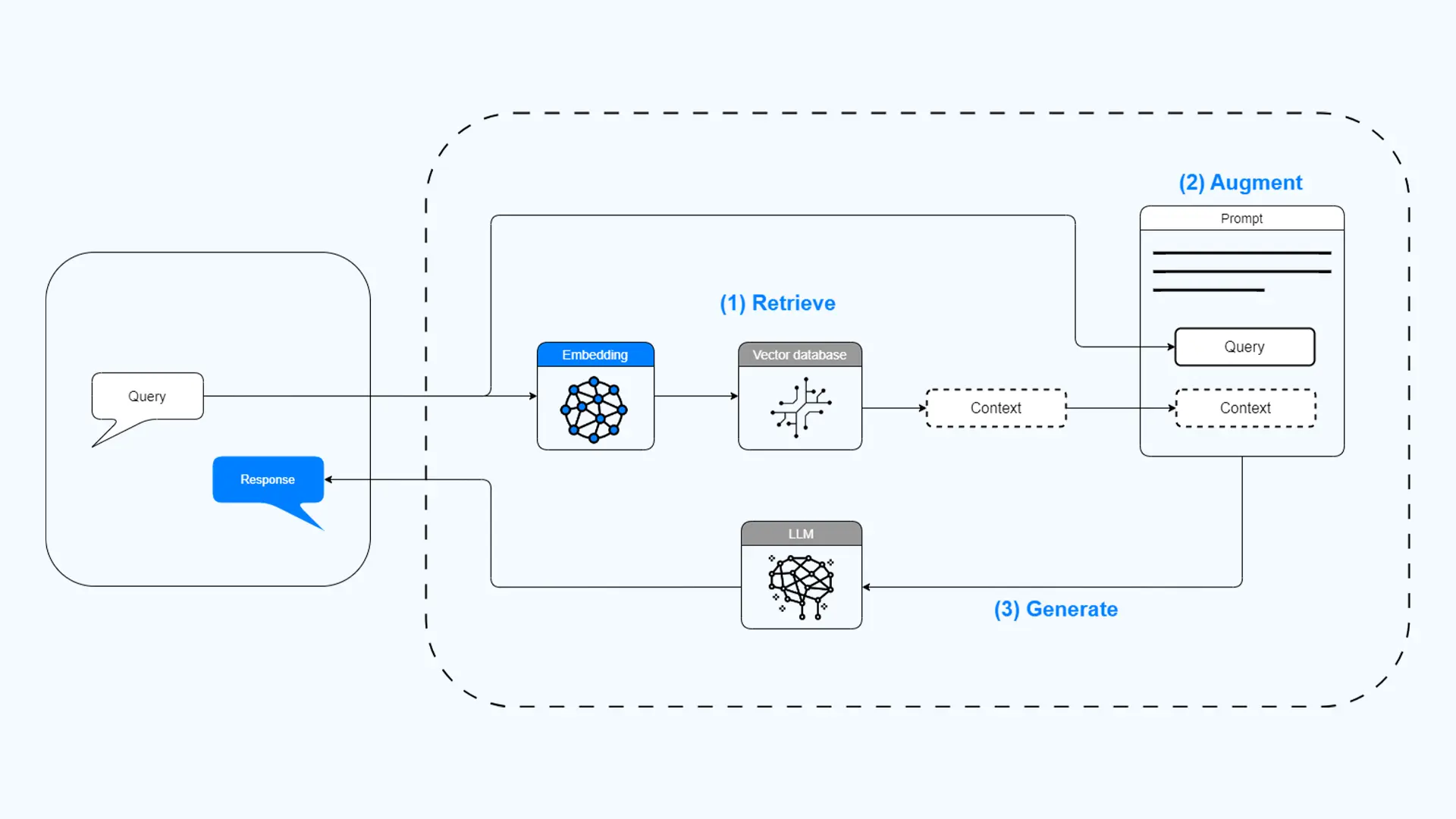

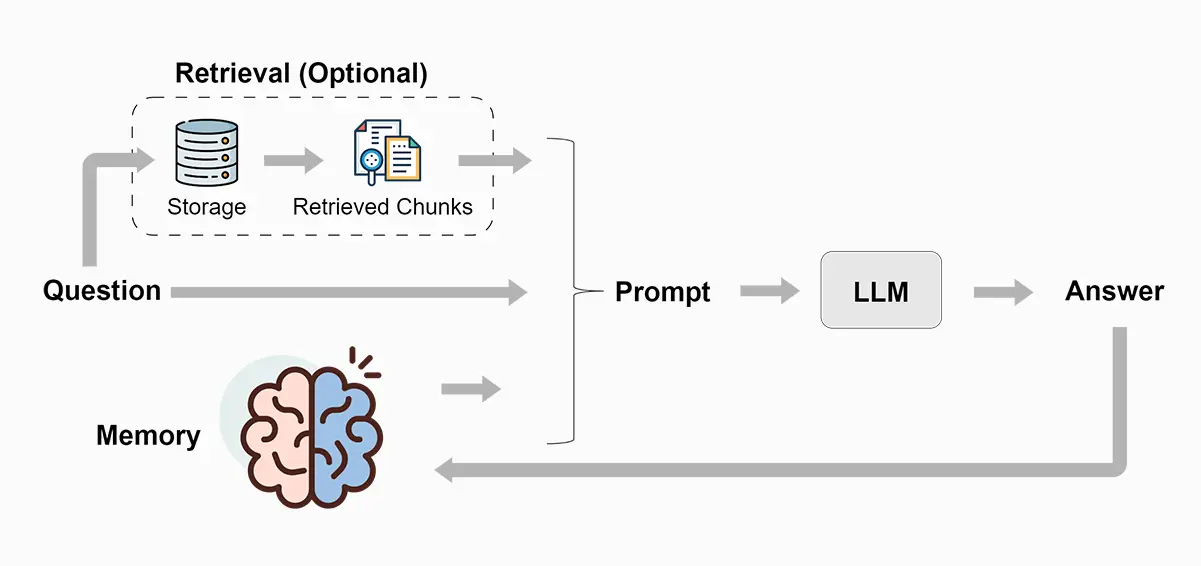

In LangChain, RAG stands for Retrieval Augmented Generation. It’s a technique that combines generative models, like large language models (LLMs), with retrieval mechanisms to enhance the accuracy and relevance of the generated information.

Retrieval

When you provide a prompt or context, the retrieval component searches for relevant documents or data points from external sources like databases, knowledge graphs, or previously processed information.

Embedding

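To make that search possible, the documents in your knowledge source are converted ahead of time into numerical representations called embeddings (stored, for example, in a Pinecone index), and your prompt is embedded the same way, so retrieval can find the documents whose embeddings are closest to it.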

Guidance

The text of the retrieved documents is added to the prompt alongside your question, and the LLM uses it to inform its text generation. This keeps the generated output consistent with the retrieved information and relevant to the provided context.
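The sketch below shows the retrieve-then-generate flow end to end without an API key. It uses a toy "embedding" (word counts over a small fixed vocabulary) and cosine similarity to pick the most relevant document, then places that document's text in the prompt. Everything here is hypothetical. In a setup like this blog's, an OpenAI embedding model would produce the 1536-dimensional vectors, and the Pinecone index would run the similarity search.

```typescript
// Toy embedding: count how often each vocabulary word appears in the text.
// A real system would call an embedding model instead (an assumption here).
const vocabulary = ["pinecone", "vector", "langchain", "chain", "memory", "chat", "history"];

function embed(text: string): number[] {
  const words = text.toLowerCase().split(/\W+/);
  return vocabulary.map((term) => words.filter((w) => w === term).length);
}

function cosine(a: number[], b: number[]): number {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

const knowledgeBase = [
  "Pinecone is a vector database that stores vector embeddings.",
  "LangChain lets you chain prompts, models and parsers.",
  "Memory keeps the chat history so the bot remembers earlier turns.",
];

// Documents are embedded once, ahead of time (like upserting into an index).
const indexed = knowledgeBase.map((text) => ({ text, vector: embed(text) }));

// Retrieval: return the k documents most similar to the question.
function retrieve(question: string, k: number): string[] {
  const q = embed(question);
  return indexed
    .map((doc) => ({ text: doc.text, score: cosine(q, doc.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((doc) => doc.text);
}

// Augmentation: put the retrieved text into the prompt sent to the LLM.
function buildRagPrompt(question: string): string {
  const context = retrieve(question, 1).join("\n");
  return `Use the context to answer.\nContext:\n${context}\nQuestion: ${question}`;
}
```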

Improved accuracy and relevance

The retrieved information acts as a factual backbone for the LLM, reducing the risk of generating inaccurate or irrelevant content.

Knowledge integration

Allows you to easily incorporate external knowledge sources into your LLM’s workflow, expanding its reach and capabilities.

Contextual awareness

The LLM is better able to understand the specific context of your request and generate responses that are more tailored and meaningful.

RAG can significantly enhance your chatbot’s capabilities by adding context awareness, improving accuracy, and deepening integration with external knowledge sources. When a user asks a question, RAG retrieves relevant documents such as factsheets or official reports, so the chatbot can ground its answers in them and cite them as evidence. RAG can also retrieve past conversations and user profile data to personalize responses, tailoring future interactions to the user’s specific needs and interests.

Answering complex questions

By leveraging retrieved research papers, historical records, or technical manuals, the chatbot can tackle complex inquiries efficiently and accurately, even if they go beyond its basic knowledge base.

Guiding user exploration

When a user expresses interest in a specific topic, RAG can use retrieved resources to suggest relevant articles, videos, or additional information sources, enhancing user engagement and learning.

Multilingual communication

If your chatbot operates in multiple languages, RAG can assist with finding and translating relevant documents, enabling cross-lingual communication and knowledge sharing.

In this example, the system incorporates information from the PDF book into its knowledge base, expanding its potential for answering questions accurately. The retrieved context helps the LLM generate responses that are relevant to the specific question and the information available in the book. Retrieval improves the chances of producing factual and well-grounded responses, reducing the risk of generating inaccurate or misleading information.

This example demonstrates how RAG can effectively combine retrieval and generation to create more knowledgeable, contextually aware, and accurate responses, drawing on external knowledge sources to enhance the capabilities of LLMs.

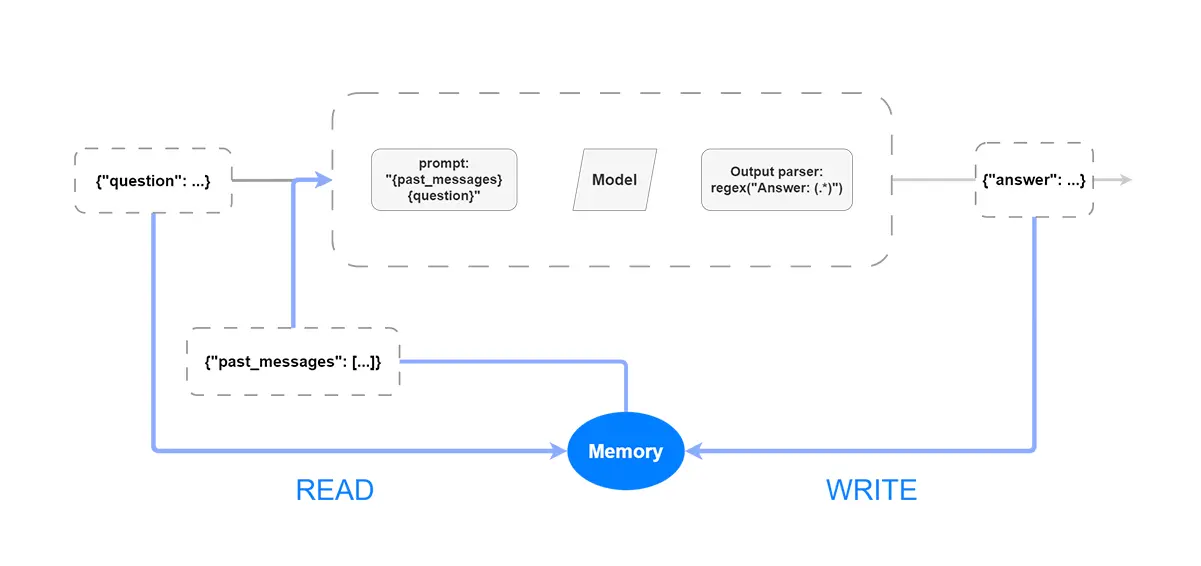
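A long source such as a PDF book is usually split into overlapping chunks before it is embedded. Each piece then fits the embedding model's input limit, and retrieval can return focused passages. LangChainJS provides text splitters for this, for example `RecursiveCharacterTextSplitter`. The sketch below is a much simpler, hypothetical fixed-size character splitter. Its `chunkSize`/`overlap` values are illustrative, not taken from the original example.

```typescript
// Hypothetical fixed-size splitter: cut text into chunks of at most
// `chunkSize` characters, where consecutive chunks share `overlap` characters.
function splitText(text: string, chunkSize: number, overlap: number): string[] {
  if (chunkSize <= 0 || overlap < 0 || overlap >= chunkSize) {
    throw new Error("Require chunkSize > 0 and 0 <= overlap < chunkSize");
  }
  const chunks: string[] = [];
  const step = chunkSize - overlap;
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    if (start + chunkSize >= text.length) break; // this chunk reached the end
  }
  return chunks;
}

const chunks = splitText("abcdefghij", 4, 1);
// chunks: ["abcd", "defg", "ghij"]
```

Each chunk would then be embedded and upserted into the Pinecone index, typically with metadata such as the page number it came from.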

Memory in LangChain plays a crucial role in building intelligent and interactive workflows. It allows you to store and reuse information across different interactions, fostering context awareness and personalized experiences.

Context awareness

Remember past interactions and user preferences to deliver relevant responses and experiences.

Personalization

Adapt to individual users based on their stored information and preferences.

Efficiency

Avoid repetitive processing by reusing cached data and stored outputs.

Consistency

Maintain consistent responses and avoid contradictions across interactions.

Conversation Buffer

Stores recent chat messages, enabling context awareness and smoother conversation flow.

Conversation Knowledge Graph

Captures extracted entities and relationships for personalized and knowledge-based interactions.

Conversation Summary

Creates concise summaries of past conversations for easier reference and analysis.

Custom Memory Classes

Allows you to define your own memory stores to manage specific data types relevant to your needs.

Make your LangChain Chatbot more interactive and user-friendly by personalizing responses and remembering past interactions. By remembering the conversation flow, your chatbot can avoid awkward jumps or contradicting statements. Imagine discussing a movie then suddenly being asked about your favorite food! It allows for natural back-and-forth conversations, making the interaction feel more human-like and engaging.

In this example, memory enables context-aware conversations: the chatbot can remember past information and use it to create more relevant and engaging responses. BufferMemory is a simple type of memory that stores the conversation’s messages and supplies them to the prompt as history (BufferWindowMemory is the variant that keeps only the most recent exchanges). Memory is integrated into the chatbot’s processing using RunnableSequence, allowing it to read and update memory at different stages. Prompts play a crucial role in incorporating memory into the generation process, and saving each response to memory along with its corresponding input builds a rich context for future interactions.
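Here is a hypothetical, dependency-free sketch of that loop. `SimpleBufferMemory` loosely mirrors the `loadMemoryVariables`/`saveContext` pair exposed by LangChainJS memory classes such as `BufferMemory`, but it is not the library class. The library methods are asynchronous and take objects of input and output values. The sketch's optional `maxMessages` limit imitates a windowed buffer, and `fakeModel` stands in for a real chat model.

```typescript
interface ChatTurnMessage {
  role: "human" | "ai";
  content: string;
}

// Hypothetical buffer memory: stores messages and returns them as a history string.
class SimpleBufferMemory {
  private messages: ChatTurnMessage[] = [];
  private readonly maxMessages: number;

  constructor(maxMessages: number = Infinity) {
    this.maxMessages = maxMessages;
  }

  loadMemoryVariables(): { history: string } {
    const history = this.messages
      .map((m) => `${m.role === "human" ? "Human" : "AI"}: ${m.content}`)
      .join("\n");
    return { history };
  }

  saveContext(input: string, output: string): void {
    this.messages.push({ role: "human", content: input }, { role: "ai", content: output });
    // Keep only the newest messages when a window size was given.
    if (this.messages.length > this.maxMessages) {
      this.messages = this.messages.slice(this.messages.length - this.maxMessages);
    }
  }
}

// Stand-in model: answers "What is my name?" by searching the prompt it was given.
function fakeModel(prompt: string): string {
  if (prompt.endsWith("What is my name?")) {
    const match = prompt.match(/my name is (\w+)/i);
    return match ? `Your name is ${match[1]}.` : "I don't know your name yet.";
  }
  return "Nice to meet you!";
}

function chatTurn(memory: SimpleBufferMemory, userInput: string): string {
  const { history } = memory.loadMemoryVariables(); // 1. read past turns
  const prompt = `Conversation so far:\n${history}\nHuman: ${userInput}`; // 2. fill the prompt
  const reply = fakeModel(prompt); // 3. call the model
  memory.saveContext(userInput, reply); // 4. save the new exchange
  return reply;
}
```

With a full buffer, a second question such as "What is my name?" can be answered from the first turn. With a window of two messages, a later turn no longer sees the introduction, which is the trade-off between the two kinds of buffer.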

In this blog post, we’ve explored the exciting potential of LangChain to build powerful and versatile chatbots. We’ve seen how LangChain simplifies conversation flows, enhances context awareness, and empowers developers to craft unique conversational experiences.

By leveraging retrieval-augmented generation (RAG) with Pinecone’s efficient embedding retrieval, we can push the boundaries of chatbot intelligence, enabling them to access and integrate information seamlessly. This paves the way for more engaging, informative, and human-like interactions.

Remember, LangChain is more than just a technical solution; it’s a gateway to crafting chatbots that are truly game-changing. With its flexibility and power, LangChain empowers developers to bring their chatbot visions to life, shaping the future of intelligent conversation.

So, are you ready to take your chatbot development to the next level? Start exploring LangChain and its potential to revolutionize your conversational AI!

Explore the AI-powered chatbot our team built with LangChainJS, OpenAI, and Pinecone here: LangChain Chatbot

You can check out our LangChain Services as well.

1. Introduction

2. Setting Up the Development Environment

– 2.1 Next.js

– 2.2 Pinecone

– 2.3 LangChain and OpenAI API Key

3. Understanding the Building Blocks

– 3.1 Prompt Templates

— 3.1.1 Example of Prompt Template in LangChain

– 3.2 Output Parser

— 3.2.1 Different types of Output Parsers in LangChain

– 3.3 Stop Sequence

— 3.3.1 Example of Stop Sequence

4. How To Enhance Your Chatbot’s Interactions

– 4.1 Use Function Schema

— 4.1.1 Key Components of Function Schema

— 4.1.2 Benefits of using Function Schema

— 4.1.3 Example of using Function Schemas for specific tasks in a chatbot

– 4.2 Use Runnable Sequence

— 4.2.1 Here’s an example of Runnable Sequence in LangChain

– 4.3 Use Runnable Map

— 4.3.1 How does Runnable Map Work

— 4.3.2 Benefits of Runnable Map

— 4.3.3 Example of Runnable Map

– 4.4 Use Retrieval Augmented Generation (RAG)

— 4.4.1 How does RAG work

— 4.4.2 Benefits of using RAG in LangChain

— 4.4.3 How can RAG be used in a Chatbot

— 4.4.4 Use Cases of RAG in Chatbots

— 4.4.5 Example of RAG

5. Memory

– 5.1 Memory

— 5.1.1 Why use Memory in a Chatbot?

— 5.1.2 Types of memory in LangChain

— 5.1.3 Benefits of using memory in Chatbot

— 5.1.4 Example of Memory

6. Conclusion